Field operators responding to disasters or attacks often have to rely on distributed data streams to make rapid decisions about where to focus or deploy their response. Disasters produce complex, multimodal data such as text reports, sensor feeds, geospatial maps, and incident logs. This multimodality makes the data harder to analyze in real time. Moreover, the data is often siloed, inconsistently formatted, and dynamically updated. The main goal of this project is to reduce the manual effort required to coordinate disaster response via phone or email and to provide actionable reasoning results to field operators. The key aims include:

- Aim 1: Integrate FEMA’s datasets and other sources (e.g., state and local response entities) into a Knowledge Graph (KG)

- Aim 2: Use Large Language Models (LLMs) as a user interface to enable natural language queries and dynamic reasoning

Technical Approach

For this project, we integrate diverse FEMA data sources (historical disaster declarations, assistance programs, deployment records) into a unified knowledge graph (KG). Our layered reasoning framework consists of:

- Knowledge Graph: Structures and interlinks FEMA data (events, locations, declarations, programs)

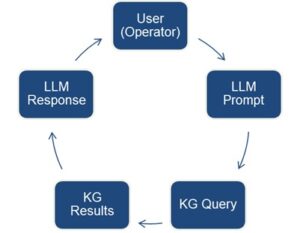

- Large Language Model (LLM) Interface: Handles question interpretation, query generation, and response summarization

A closed-loop data cycle ensures continuously updated, context-rich answers.
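The layered flow described above can be illustrated with a minimal sketch. This is not the project's implementation: the triple store, the predicate names (`declaredIn`, `hasProgram`, `hasEvent`), the identifiers such as `declaration:EX-0001`, and all function names are hypothetical placeholders, and a simple keyword rule stands in for the LLM's question-interpretation step. The example only shows how a question could be turned into a graph pattern, matched against the KG, and summarized, and how a newly added record changes the next answer.

```python
from typing import Dict, List, NamedTuple, Optional


class Triple(NamedTuple):
    """One edge of the graph: subject --predicate--> obj."""
    subject: str
    predicate: str
    obj: str


class KnowledgeGraph:
    """Tiny in-memory triple store (a stand-in for a real KG backend)."""

    def __init__(self) -> None:
        self._triples = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self._triples.add(Triple(subject, predicate, obj))

    def match(self, subject: Optional[str] = None,
              predicate: Optional[str] = None,
              obj: Optional[str] = None) -> List[Triple]:
        """Return triples matching the pattern; None acts as a wildcard."""
        return sorted(
            t for t in self._triples
            if (subject is None or t.subject == subject)
            and (predicate is None or t.predicate == predicate)
            and (obj is None or t.obj == obj)
        )


def build_example_graph() -> KnowledgeGraph:
    """Populate the graph with made-up records (not real FEMA data)."""
    kg = KnowledgeGraph()
    kg.add("declaration:EX-0001", "hasEvent", "event:ExampleFlood")
    kg.add("declaration:EX-0001", "declaredIn", "location:ExampleCounty")
    kg.add("declaration:EX-0001", "hasProgram", "program:ExampleAssistance")
    kg.add("declaration:EX-0002", "declaredIn", "location:ExampleCounty")
    return kg


def interpret_question(question: str) -> Dict[str, str]:
    """Placeholder for the LLM step: map a question to a graph pattern.

    A real system would call a language model here; this keyword rule
    only recognizes questions that mention the hypothetical county.
    """
    if "examplecounty" in question.lower().replace(" ", ""):
        return {"predicate": "declaredIn", "obj": "location:ExampleCounty"}
    return {}


def answer(kg: KnowledgeGraph, question: str) -> str:
    """Interpret the question, query the graph, and summarize the hits."""
    pattern = interpret_question(question)
    if not pattern:
        return "No matching query pattern."
    subjects = [t.subject for t in kg.match(**pattern)]
    return f"{len(subjects)} declaration(s): " + ", ".join(subjects)


if __name__ == "__main__":
    kg = build_example_graph()
    question = "Which declarations cover Example County?"
    print(answer(kg, question))
    # Closed loop: a newly ingested record is reflected in the next answer.
    kg.add("declaration:EX-0003", "declaredIn", "location:ExampleCounty")
    print(answer(kg, question))
```

Keeping interpretation, graph matching, and summarization as separate functions mirrors the layering in the description: the placeholder rule could be swapped for a model call without changing how the graph is queried.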

Project Members

Faculty: Dr. Karuna P Joshi

Students: Kelvin Echenim

CARTA Member Sponsors: DHS